Data vs. Findings vs. Insights in UX

How data, findings and UX insights differ, and how to handle questions about the statistical significance of your UX research.

In many companies, data, findings and insights are all used interchangeably. Slack conversations circle around convincing data points, statistically significant findings, reliable insights and emerging trends. Unsurprisingly, conversations often mistake sporadic observations for consistent patterns.

But how much weight does each of them actually carry? And how do we turn raw data into meaningful insights to make better decisions? Well, let's find out.

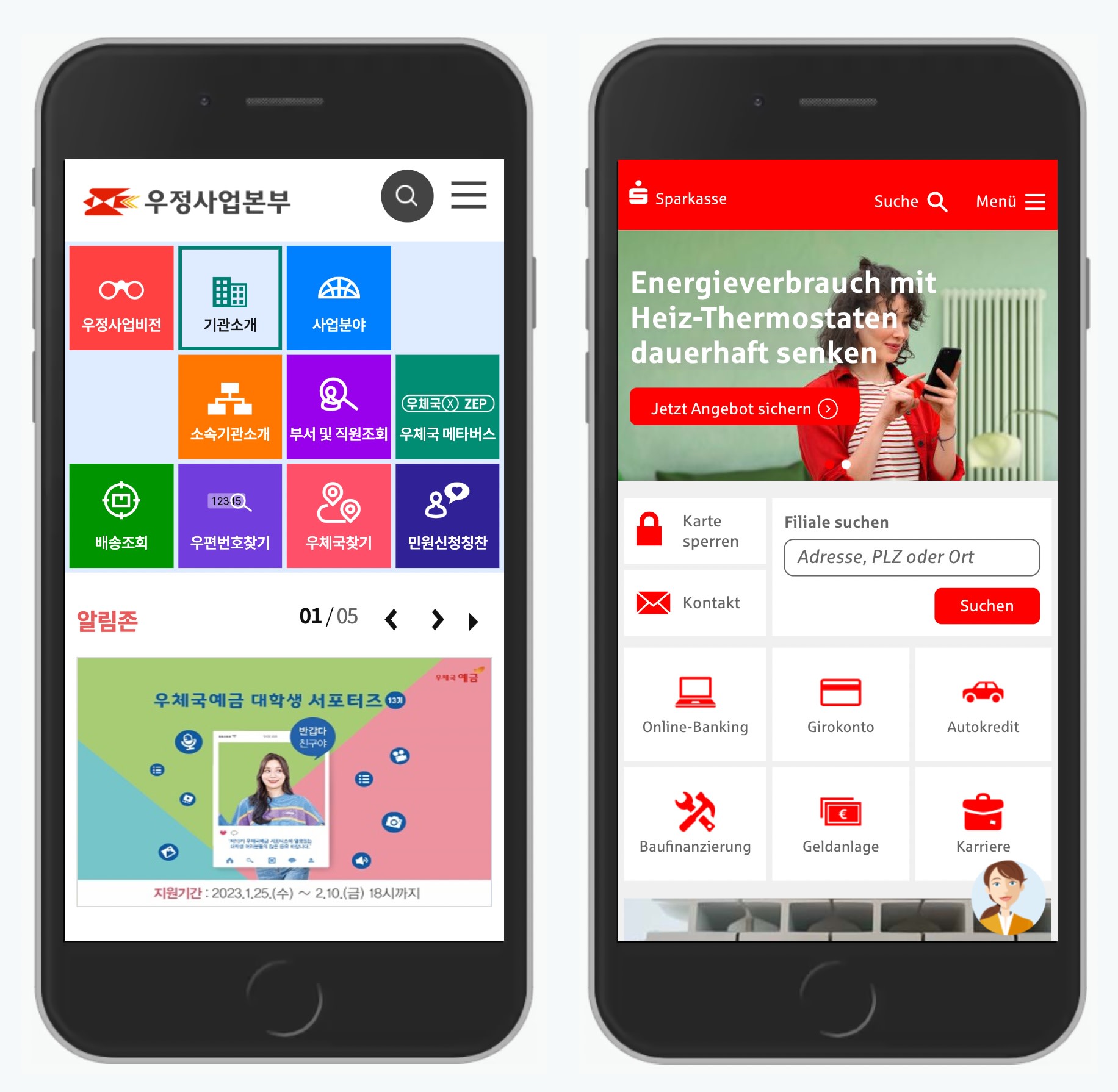

Data ≠ Findings ≠ Insights. A short video by NN/g explains the differences between them.

Why It All Matters #

At first, it might feel like the differences are very nuanced and merely technical. But when we review inputs and communicate outcomes of our UX work, we need to be careful not to conflate terminology — to avoid wrong assumptions, wrong conclusions and early dismissals.

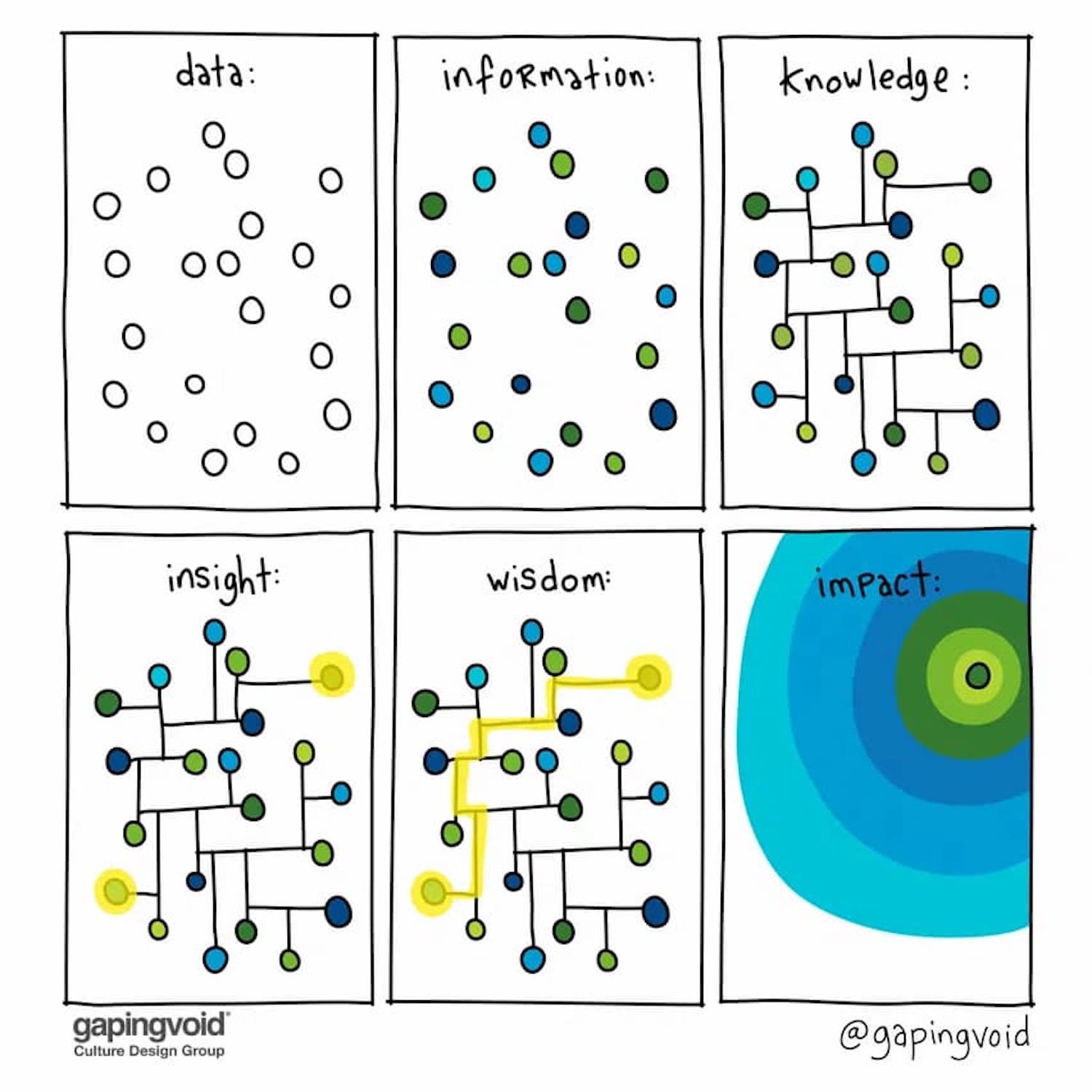

Raw data points are random and inconclusive. To be valuable, they must be turned into insights. Cartoon by Hugh MacLeod.

When strong recommendations and bold statements emerge in a big meeting, inevitably there will be people questioning the decision-making process. More often than not, they will be the loudest voices in the room, often with their own agenda and priorities that they are trying to protect.

As UX designers, we need to be prepared for it. The last thing we want is to have a weak line of thinking, easily dismantled under the premise of “weak research”, “unreliable findings”, “poor choice of users” — and hence dismissed straight away.

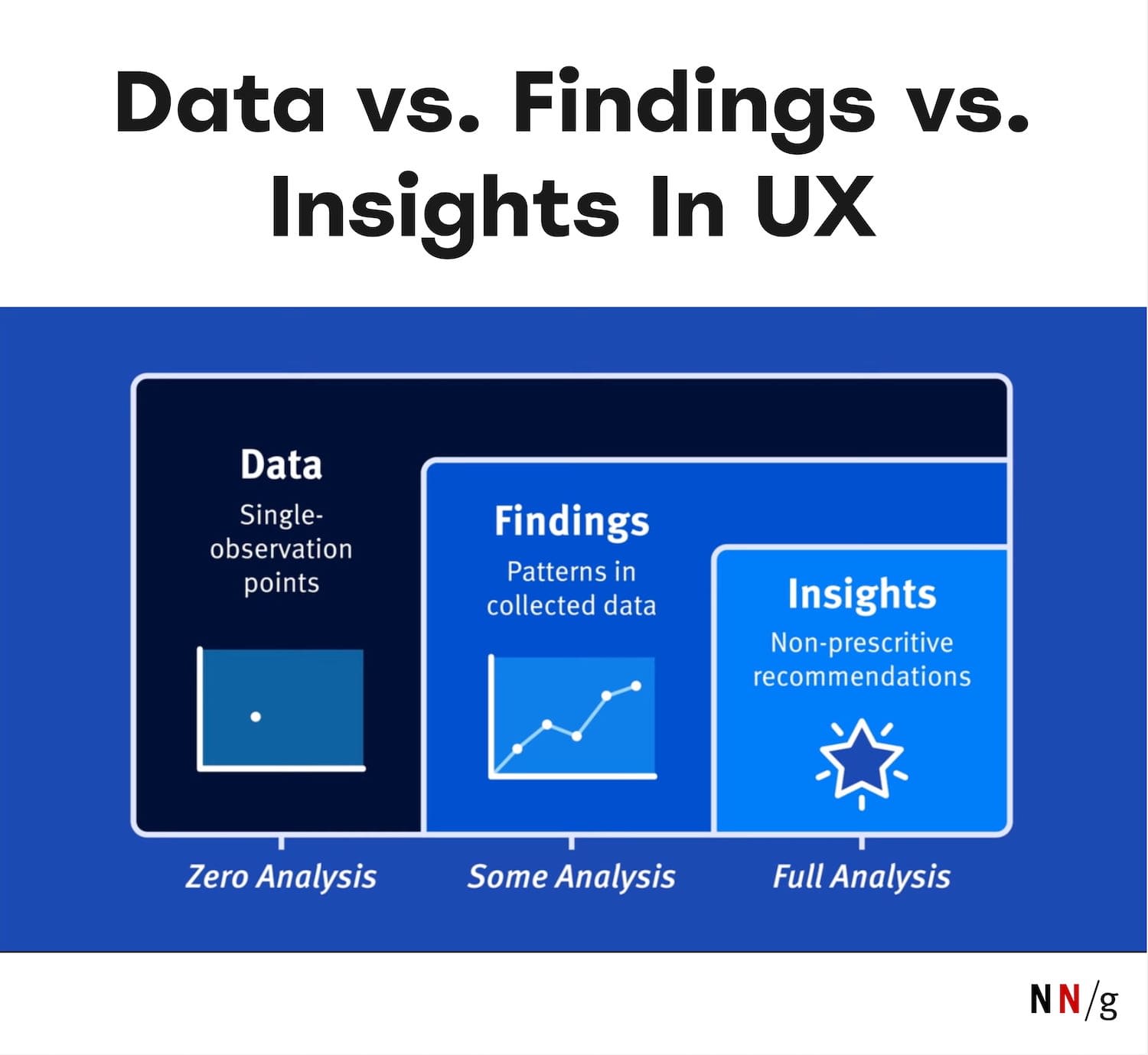

Data ≠ Findings ≠ Insights #

People with different roles — analysts, data scientists, researchers, strategists — often rely on fine distinctions to make their decisions. The general difference is easy to put together:

- Data is raw observations, such as logs, notes and survey answers (what was recorded).

- Findings describe emerging patterns in data, but aren’t actionable (what happened).

- Insights are business opportunities (what happened + why + so what).

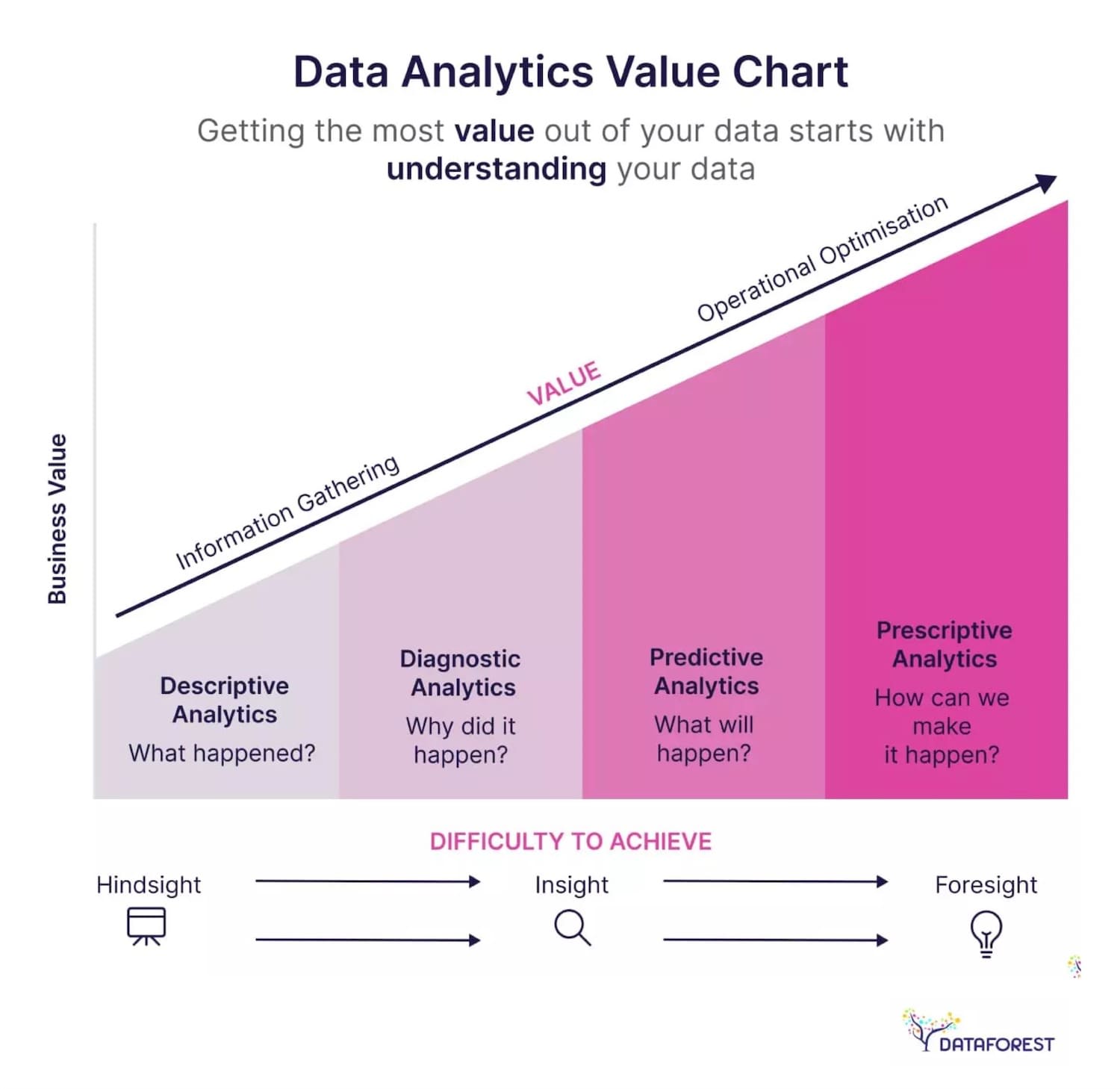

- Hindsights are reflections of past actions and outcomes (what we learned in previous work)

- Foresights are informed projections, insights with extrapolation (what could happen next)

Business value emerges from turning hindsights into insights, and then insights into foresights. From: The Hidden Truth of Business.

Here’s what it looks like in real life:

- Data ↓

6 users were looking for “Money transfer” in “Payments”, and 4 users discovered the feature in their personal dashboard.

- Finding ↓

60% of users struggled to find the “Money transfer” feature on a dashboard, often confusing it with the “Payments” section.

- Insight ↓

Navigation doesn’t match users’ mental models for money transfer, causing confusion and delays. We recommend renaming sections or reorganizing the dashboard to prioritize “Transfer Money”. It could make task completion more intuitive and efficient.

- Hindsight ↓

After renaming the section to “Transfer Money” and moving it to the main dashboard, task success increased by 12%. User confusion dropped in follow-up tests. It proved to be an effective solution.

- Foresight ↓

As our financial products become more complex, users will expect simpler, task-oriented navigation (e.g., “Send Money”, “Pay Bills”) instead of categories like “Payments”. We should evolve the dashboard towards action-driven IA to meet user expectations.
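To make the first step of that chain tangible, here is a minimal sketch of how the raw observations above could be tallied into the finding. The `observations` list and the `summarize` helper are hypothetical and exist only for illustration; they simply mirror the 6 and 4 participants from the example.

```python
from collections import Counter

# Hypothetical raw data: where each of the 10 participants first looked
# for "Money transfer". The labels are illustrative, not from a real study.
observations = ["Payments"] * 6 + ["Dashboard"] * 4


def summarize(observations, expected):
    """Count how many participants first looked somewhere other than `expected`."""
    counts = Counter(observations)
    total = len(observations)
    missed = total - counts[expected]
    return {"total": total, "missed": missed, "missed_share": missed / total}


finding = summarize(observations, expected="Dashboard")
print(
    f"{finding['missed']} of {finding['total']} participants "
    f"({finding['missed_share']:.0%}) looked outside the dashboard first."
)
# prints: 6 of 10 participants (60%) looked outside the dashboard first.
```

Keeping the raw count next to the percentage is a deliberate choice here: it shows at a glance that the 60% comes from 10 sessions, which helps when the reliability of a finding is questioned.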

Only insights create understanding and drive strategy. Foresights shape strategy, too, but are always based on bets and assumptions. So unsurprisingly, stakeholders are interested in insights, not findings. They rarely need to dive into raw data points. But often they do want to make sure that findings are reliable.

That’s when eventually the big question about statistical significance comes along. And that’s when ideas and recommendations often get dismissed without a chance to be explored or explained.

“But Is It Statistically Significant?” #

Now, for UX designers, that’s an incredibly difficult question to answer. As Nikki Anderson pointed out, statistical significance was never designed for qualitative research. And with UX work, we’re not trying to publish academic research or prove universal truths.

What we are trying to do is reach theoretical saturation, the point where additional research doesn’t give us new insights. Research isn’t about proving something is true. It’s about preventing costly mistakes before they happen.
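One way to make saturation visible is to track how many new issues each session adds. The sketch below is only an illustration: the session data is invented, and the rule that three sessions in a row without new issues counts as saturation is an assumption, not an established threshold.

```python
def new_issues_per_session(sessions):
    """For each session (a set of issue labels), count labels not seen in earlier sessions."""
    seen = set()
    new_counts = []
    for issues in sessions:
        fresh = set(issues) - seen
        new_counts.append(len(fresh))
        seen |= fresh
    return new_counts


def reached_saturation(new_counts, quiet_sessions=3):
    """True if the last `quiet_sessions` sessions surfaced nothing new.

    The default of 3 is an assumed cut-off for illustration only.
    """
    if len(new_counts) < quiet_sessions:
        return False
    return all(n == 0 for n in new_counts[-quiet_sessions:])


# Hypothetical coded notes from six research sessions.
sessions = [
    {"nav-label", "slow-load"},
    {"nav-label", "hidden-cta"},
    {"slow-load", "jargon"},
    {"nav-label"},
    {"hidden-cta", "jargon"},
    {"slow-load"},
]

counts = new_issues_per_session(sessions)
print(counts)                      # [2, 1, 1, 0, 0, 0]
print(reached_saturation(counts))  # True
```

A simple tally like this won't settle the argument on its own, but it gives us something concrete to show when asked why we stopped recruiting.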

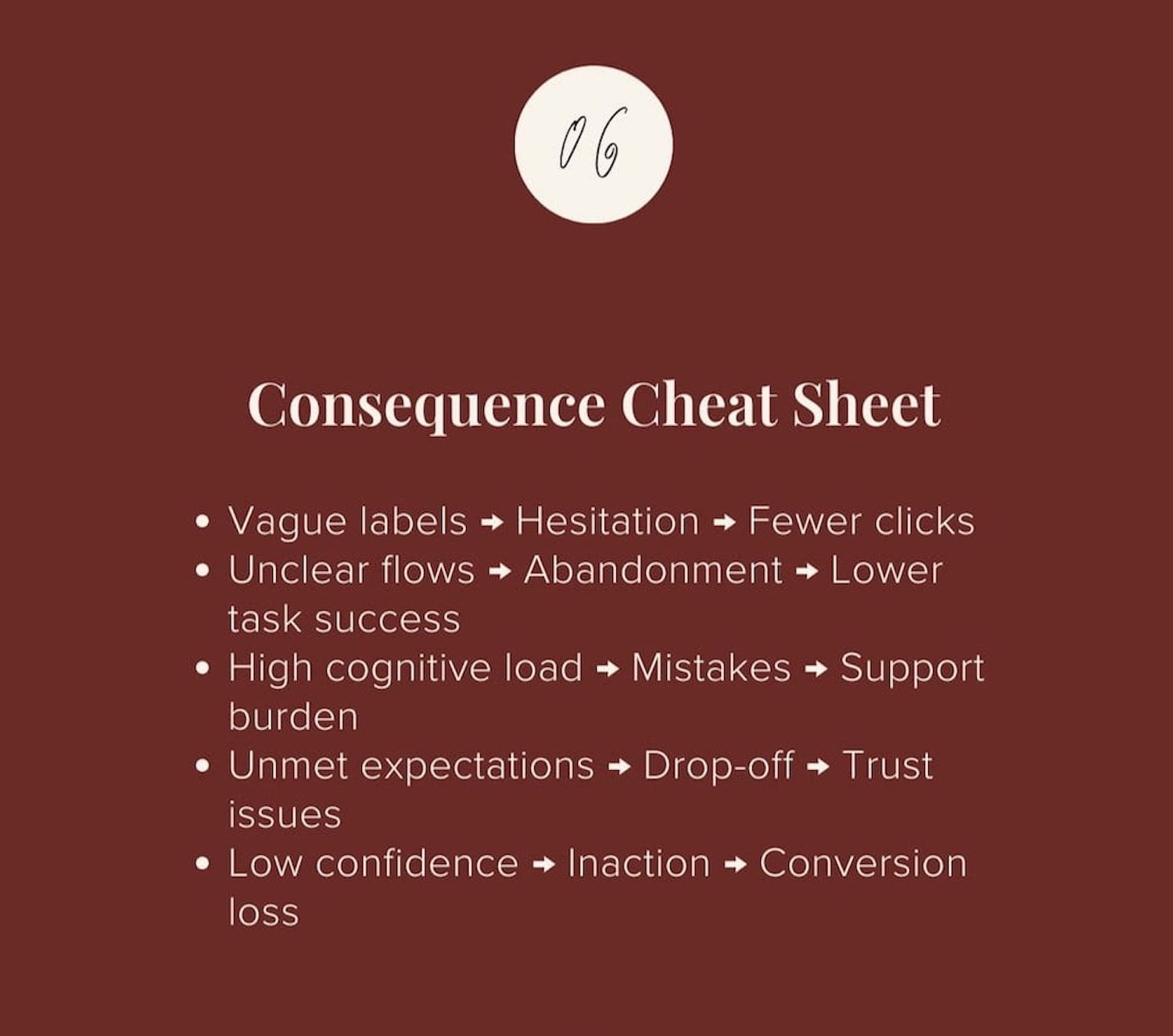

Consequence cheat sheet for turning findings into insights. By Nikki Anderson.

Here are some useful talking points to handle the question:

- 5 users per segment often surface major issues, and 10-15 users per segment usually reach saturation. If we’re still getting new insights after that, our scope is too broad.

- “If 5 people hit the same pothole and wreck their car, how many more do you need before fixing the road?”

- “If 3 enterprise customers say onboarding is confusing, that’s a churn risk.”

- “If 2 usability tests expose a checkout issue, that’s abandoned revenue.”

- “If 1 customer interview reveals a security concern, that’s a crisis waiting to happen.”

- “How many user complaints exactly do we need to take this seriously?”

- “How much revenue exactly are we willing to lose before fixing this issue?”

And it might not be necessary to focus on the number of participants at all. Instead, point to users consistently struggling with a feature, a mismatch of expectations, and a clear pattern emerging around a particular pain point.

How To Turn Findings Into Insights #

Once we notice patterns emerging, we need to turn them into actionable recommendations. Surprisingly, this isn’t always easy — we need to avoid easy guesses and assumptions as far as possible, as they will invite wrong conclusions.

To do that, you can rely on a very simple but effective framework for turning findings into insights, What Happened + Why + So What:

- “What happened” covers observed behavior and patterns.

- “Why” includes beliefs, expectations, or triggers.

- “So What” addresses impact, risk, business opportunity.

To better assess the “so what” part, we should pay close attention to the impact of what we have noticed on desired business outcomes. It can be anything from high-impact blockers and confusion to hesitation and inaction.
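If it helps to keep the three parts honest, the framework can be treated as a small template that flags whatever is still missing. This is just a sketch: the `Insight` class and its method names are hypothetical, and the example text is borrowed from the money transfer scenario earlier in the article.

```python
from dataclasses import dataclass


@dataclass
class Insight:
    what_happened: str  # observed behavior and patterns
    why: str            # beliefs, expectations, or triggers
    so_what: str        # impact, risk, business opportunity

    def missing_parts(self):
        """Names of the parts that are still empty."""
        return [name for name, value in vars(self).items() if not value.strip()]

    def is_complete(self):
        return not self.missing_parts()

    def statement(self):
        """Join the three parts into one sentence-level summary."""
        return f"{self.what_happened} {self.why} {self.so_what}"


# A draft that is still only a finding plus a reason.
draft = Insight(
    what_happened="6 of 10 participants looked for 'Money transfer' in 'Payments'.",
    why="Navigation doesn't match users' mental models for money transfer.",
    so_what="",
)
print(draft.missing_parts())  # ['so_what']

draft.so_what = "Confusion delays a core task, so we should rename or reorganize the section."
print(draft.is_complete())    # True
```

An empty `so_what` is a useful warning sign: it usually means we are about to present a finding rather than an insight.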

I can wholeheartedly recommend exploring the Findings → Insights Cheatsheet in Nikki Anderson’s wonderful slide deck, which has examples and prompts for turning findings into insights.

Stop Sharing Findings; Deliver Insights #

When presenting the outcomes of your UX work, focus on actionable recommendations and business opportunities, rather than patterns that emerged during testing.

To me, it’s all about telling a damn good story. Memorable, impactful, feasible, convincing. Paint the picture of what the future could look like, and the difference it would make. That’s where the biggest impact from UX work emerges.

Useful Resources #

- How To Deal With “Is This Statistically Significant?”, by Nikki Anderson

- Data vs. Findings vs. Insights: Differences Explained (+ video), by Sara Paul, NN/g

- Research Findings vs Insights vs Actionable Suggestions, by Debbie Levitt